Just notes for my own study.

import importlib

from omegaconf import OmegaConf

Supports reading, creating, and modifying configuration files in formats such as JSON and YAML.

from pytorch_lightning import seed_everything

from ldm.data.base import Txt2ImgIterableBaseDataset

A predefined dataset format:

class Txt2ImgIterableBaseDataset(IterableDataset):
    '''
    Define an interface to make the IterableDatasets for text2img data chainable
    '''
    def __init__(self, num_records=0, valid_ids=None, size=256):
        super().__init__()
        self.num_records = num_records
        self.valid_ids = valid_ids
        self.sample_ids = valid_ids
        self.size = size

        print(f'{self.__class__.__name__} dataset contains {self.__len__()} examples.')

    def __len__(self):
        return self.num_records

    @abstractmethod
    def __iter__(self):
        pass

from ldm.util import instantiate_from_config

Creates an instance from the module path and parameters specified in the config:

def instantiate_from_config(config):
    if not "target" in config:
        if config == '__is_first_stage__':
            return None
        elif config == "__is_unconditional__":
            return None
        raise KeyError("Expected key `target` to instantiate.")
    return get_obj_from_str(config["target"])(**config.get("params", dict()))

def get_obj_from_str(string, reload=False):
    module, cls = string.rsplit(".", 1)
    if reload:
        module_imp = importlib.import_module(module)
        importlib.reload(module_imp)
    return getattr(importlib.import_module(module, package=None), cls)

Example:
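As a minimal sketch of how the `target` / `params` convention resolves to an object, the snippet below re-implements a simplified version of the two helpers and applies them to a standard-library class. The config dictionary and the choice of `fractions.Fraction` are assumptions made for illustration only; in the repository the config would come from a YAML file and point at model or dataset classes, and the special string configs handled by the original are left out here.

```python
import importlib


def get_obj_from_str(string):
    # Split "package.module.ClassName" into a module path and an attribute name.
    module, cls = string.rsplit(".", 1)
    return getattr(importlib.import_module(module), cls)


def instantiate_from_config(config):
    # Simplified: the special string configs of the original are omitted.
    if "target" not in config:
        raise KeyError("Expected key `target` to instantiate.")
    return get_obj_from_str(config["target"])(**config.get("params", dict()))


# Hypothetical config, used only to illustrate the mechanism.
config = {
    "target": "fractions.Fraction",
    "params": {"numerator": 3, "denominator": 4},
}
obj = instantiate_from_config(config)
print(type(obj).__name__, obj)
```

The class is looked up by name at run time, so swapping a model or dataset only requires changing the `target` string and its `params` in the config.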

def nondefault_trainer_args(opt):
    parser = argparse.ArgumentParser()
    parser = Trainer.add_argparse_args(parser)
    args = parser.parse_args([])
    return sorted(k for k in vars(args) if getattr(opt, k) != getattr(args, k))

Returns the keys whose values in opt differ from the Trainer defaults.
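The same idea can be tried without PyTorch Lightning. The sketch below assumes a small hand-written parser (`build_parser`, with made-up options and defaults) in place of `Trainer.add_argparse_args`; parsing an empty argument list gives a namespace that holds only defaults, which is then compared against the user's options.

```python
import argparse


def build_parser():
    # Hypothetical stand-in for Trainer.add_argparse_args(parser).
    parser = argparse.ArgumentParser()
    parser.add_argument("--max_epochs", type=int, default=1000)
    parser.add_argument("--gpus", type=str, default=None)
    parser.add_argument("--precision", type=int, default=32)
    return parser


def nondefault_args(opt):
    # Parsing an empty list yields a namespace containing only default values.
    defaults = build_parser().parse_args([])
    return sorted(k for k in vars(defaults) if getattr(opt, k) != getattr(defaults, k))


opt = build_parser().parse_args(["--gpus", "0,1", "--precision", "16"])
changed = nondefault_args(opt)
print(changed)
```

Only `gpus` and `precision` are reported, because `max_epochs` was left at its default.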

def worker_init_fn(_):
    worker_info = torch.utils.data.get_worker_info()

    dataset = worker_info.dataset
    worker_id = worker_info.id

    if isinstance(dataset, Txt2ImgIterableBaseDataset):
        split_size = dataset.num_records // worker_info.num_workers
        # reset num_records to the true number to retain reliable length information
        dataset.sample_ids = dataset.valid_ids[worker_id * split_size:(worker_id + 1) * split_size]
        current_id = np.random.choice(len(np.random.get_state()[1]), 1)
        return np.random.seed(np.random.get_state()[1][current_id] + worker_id)
    else:
        return np.random.seed(np.random.get_state()[1][0] + worker_id)

Fetches the worker information needed to initialize the dataset in each DataLoader worker and reseeds NumPy per worker.

For a Txt2ImgIterableBaseDataset, it also initializes the dataset's internal state by assigning each worker its own slice of valid_ids as sample_ids.
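The slicing used for `sample_ids` can be checked in isolation. This is a sketch with hypothetical record ids and a hypothetical helper name (`shard_ids`); it only reproduces the index arithmetic from `worker_init_fn`, not the seeding.

```python
def shard_ids(valid_ids, num_records, num_workers, worker_id):
    # Same slicing as in worker_init_fn: integer division, so any remainder
    # records at the end are not assigned to a worker.
    split_size = num_records // num_workers
    return valid_ids[worker_id * split_size:(worker_id + 1) * split_size]


valid_ids = list(range(10))  # hypothetical record ids
shards = [shard_ids(valid_ids, len(valid_ids), 3, w) for w in range(3)]
for worker_id, shard in enumerate(shards):
    print(worker_id, shard)
```

With 10 records and 3 workers, each worker receives 3 ids and the last id is left out, which follows from the integer division.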

class DataModuleFromConfig(pl.LightningDataModule):
    def __init__(self, batch_size, train=None, validation=None, test=None, predict=None,
                 wrap=False, num_workers=None, shuffle_test_loader=False, use_worker_init_fn=False,
                 shuffle_val_dataloader=False):
        super().__init__()
        self.batch_size = batch_size
        self.dataset_configs = dict()
        self.num_workers = num_workers if num_workers is not None else batch_size * 2
        self.use_worker_init_fn = use_worker_init_fn
        if train is not None:
            self.dataset_configs["train"] = train
            self.train_dataloader = self._train_dataloader
        if validation is not None:
            ...
        if test is not None:
            ...
        if predict is not None:
            ...
        self.wrap = wrap

    def prepare_data(self):
        for data_cfg in self.dataset_configs.values():
            instantiate_from_config(data_cfg)

    def setup(self, stage=None):
        self.datasets = dict(
            (k, instantiate_from_config(self.dataset_configs[k]))
            for k in self.dataset_configs)
        if self.wrap:
            for k in self.datasets:
                self.datasets[k] = WrappedDataset(self.datasets[k])

    def _train_dataloader(self):
        is_iterable_dataset = isinstance(self.datasets['train'], Txt2ImgIterableBaseDataset)
        if is_iterable_dataset or self.use_worker_init_fn:
            init_fn = worker_init_fn
        else:
            init_fn = None
        return DataLoader(self.datasets["train"], batch_size=self.batch_size,
                          num_workers=self.num_workers, shuffle=False if is_iterable_dataset else True,
                          worker_init_fn=init_fn)

    def _val_dataloader(self, shuffle=False):
        if isinstance(self.datasets['validation'], Txt2ImgIterableBaseDataset) or self.use_worker_init_fn:
            init_fn = worker_init_fn
        else:
            init_fn = None
        return DataLoader(self.datasets["validation"],
                          batch_size=self.batch_size,
                          num_workers=self.num_workers,
                          worker_init_fn=init_fn,
                          shuffle=shuffle)

    def _test_dataloader(self, shuffle=False):
        ...
        return DataLoader(...)

    def _predict_dataloader(self, shuffle=False):
        ...
        return DataLoader(...)

Defines the DataLoaders using PyTorch Lightning's LightningDataModule.
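One detail of `DataModuleFromConfig.__init__` is that a loader method such as `train_dataloader` is attached only when the matching config is passed. The sketch below illustrates that pattern in plain Python; `BaseModule` and `TinyDataModule` are hypothetical stand-ins, and the behaviour of the base method is an assumption chosen for the demo, not a statement about what `pl.LightningDataModule` does.

```python
class BaseModule:
    # Hypothetical, simplified stand-in for pl.LightningDataModule.
    def train_dataloader(self):
        raise NotImplementedError("no train data configured")


class TinyDataModule(BaseModule):
    def __init__(self, train=None):
        self.dataset_configs = {}
        if train is not None:
            self.dataset_configs["train"] = train
            # The instance attribute shadows the base-class method.
            self.train_dataloader = self._train_dataloader

    def _train_dataloader(self):
        # A plain list plays the role of a DataLoader here.
        return list(self.dataset_configs["train"])


with_train = TinyDataModule(train=[1, 2, 3])
without_train = TinyDataModule()
print(with_train.train_dataloader())
try:
    without_train.train_dataloader()
except NotImplementedError as err:
    print("not configured:", err)
```

Binding the method on the instance means a split that is absent from the config never appears as an overridden loader.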

class SetupCallback(Callback):
    def __init__(self, resume, now, logdir, ckptdir, cfgdir, config, lightning_config):
        super().__init__()
        self.resume = resume
        self.now = now
        self.logdir = logdir
        self.ckptdir = ckptdir
        self.cfgdir = cfgdir
        self.config = config
        self.lightning_config = lightning_config

    def on_keyboard_interrupt(self, trainer, pl_module):
        if trainer.global_rank == 0:
            ...

    def on_pretrain_routine_start(self, trainer, pl_module):
        if trainer.global_rank == 0:
            ...

A PyTorch Lightning callback that records and saves the various configurations.

class ImageLogger(Callback):
    def __init__(self, batch_frequency, max_images, clamp=True, increase_log_steps=True,
                 rescale=True, disabled=False, log_on_batch_idx=False, log_first_step=False,
                 log_images_kwargs=None):
        super().__init__()
        self.rescale = rescale
        self.batch_freq = batch_frequency
        self.max_images = max_images
        self.logger_log_images = {
            pl.loggers.TestTubeLogger: self._testtube,
        }
        self.log_steps = [2 ** n for n in range(int(np.log2(self.batch_freq)) + 1)]
        if not increase_log_steps:
            self.log_steps = [self.batch_freq]
        self.clamp = clamp
        self.disabled = disabled
        self.log_on_batch_idx = log_on_batch_idx
        self.log_images_kwargs = log_images_kwargs if log_images_kwargs else {}
        self.log_first_step = log_first_step

    # Log images to the experiment logger
    @rank_zero_only
    def _testtube(self, pl_module, images, batch_idx, split):
        for k in images:
            grid = torchvision.utils.make_grid(images[k])
            grid = (grid + 1.0) / 2.0  # -1,1 -> 0,1; c,h,w

            tag = f"{split}/{k}"
            pl_module.logger.experiment.add_image(
                tag, grid,
                global_step=pl_module.global_step)

    # Save images to disk
    @rank_zero_only
    def log_local(self, save_dir, split, images,
                  global_step, current_epoch, batch_idx):
        root = os.path.join(save_dir, "images", split)
        for k in images:
            grid = torchvision.utils.make_grid(images[k], nrow=4)
            if self.rescale:
                grid = (grid + 1.0) / 2.0  # -1,1 -> 0,1; c,h,w
            grid = grid.transpose(0, 1).transpose(1, 2).squeeze(-1)
            grid = grid.numpy()
            grid = (grid * 255).astype(np.uint8)
            filename = "{}_gs-{:06}_e-{:06}_b-{:06}.png".format(
                k,
                global_step,
                current_epoch,
                batch_idx)
            path = os.path.join(root, filename)
            os.makedirs(os.path.split(path)[0], exist_ok=True)
            Image.fromarray(grid).save(path)

    # Switch to eval -> log and save images -> switch back to train
    def log_img(self, pl_module, batch, batch_idx, split="train"):
        check_idx = batch_idx if self.log_on_batch_idx else pl_module.global_step
        if (self.check_frequency(check_idx) and  # batch_idx % self.batch_freq == 0
                hasattr(pl_module, "log_images") and
                callable(pl_module.log_images) and
                self.max_images > 0):
            logger = type(pl_module.logger)

            is_train = pl_module.training
            if is_train:
                pl_module.eval()

            with torch.no_grad():
                images = pl_module.log_images(batch, split=split, **self.log_images_kwargs)

            for k in images:
                N = min(images[k].shape[0], self.max_images)
                images[k] = images[k][:N]
                if isinstance(images[k], torch.Tensor):
                    images[k] = images[k].detach().cpu()
                    if self.clamp:
                        images[k] = torch.clamp(images[k], -1., 1.)

            self.log_local(pl_module.logger.save_dir, split, images,
                           pl_module.global_step, pl_module.current_epoch, batch_idx)

            logger_log_images = self.logger_log_images.get(logger, lambda *args, **kwargs: None)
            logger_log_images(pl_module, images, pl_module.global_step, split)

            if is_train:
                pl_module.train()

    def check_frequency(self, check_idx):
        if ((check_idx % self.batch_freq) == 0 or (check_idx in self.log_steps)) and (
                check_idx > 0 or self.log_first_step):
            try:
                self.log_steps.pop(0)
            except IndexError as e:
                print(e)
                pass
            return True
        return False

#배치가 끝날때마다 로깅

def on_train_batch_end(self, trainer, pl_module, outputs, batch, batch_idx, dataloader_idx):

if not self.disabled and (pl_module.global_step > 0 or self.log_first_step):

self.log_img(pl_module, batch, batch_idx, split="train")

def on_validation_batch_end(self, trainer, pl_module, outputs, batch, batch_idx, dataloader_idx):

if not self.disabled and pl_module.global_step > 0:

self.log_img(pl_module, batch, batch_idx, split="val")

if hasattr(pl_module, 'calibrate_grad_norm'):

if (pl_module.calibrate_grad_norm and batch_idx % 25 == 0) and batch_idx > 0:

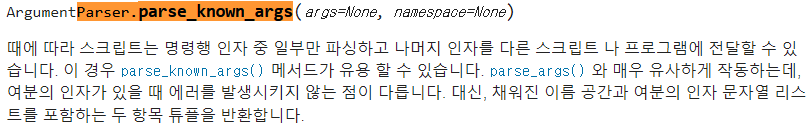

self.log_gradients(trainer, pl_module, batch_idx=batch_idx)opt, unknown = parser.parse_known_args()
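To see which steps the ImageLogger's `check_frequency` actually selects, the scheduling logic can be run on its own. The sketch below is a simplified re-implementation under stated assumptions: the class name `LogSchedule` is made up, `math.log2` replaces `np.log2`, and an emptiness check replaces the original `try/except IndexError`.

```python
import math


class LogSchedule:
    # Mirrors only the scheduling logic of ImageLogger; no images are involved.
    def __init__(self, batch_freq, increase_log_steps=True, log_first_step=False):
        self.batch_freq = batch_freq
        # Powers of two up to batch_freq: dense logging early in training.
        self.log_steps = [2 ** n for n in range(int(math.log2(batch_freq)) + 1)]
        if not increase_log_steps:
            self.log_steps = [batch_freq]
        self.log_first_step = log_first_step

    def check_frequency(self, check_idx):
        due = (check_idx % self.batch_freq) == 0 or (check_idx in self.log_steps)
        if due and (check_idx > 0 or self.log_first_step):
            if self.log_steps:
                self.log_steps.pop(0)
            return True
        return False


schedule = LogSchedule(batch_freq=100)
logged = [step for step in range(0, 301) if schedule.check_frequency(step)]
print(logged)  # [1, 2, 4, 8, 16, 32, 64, 100, 200, 300]
```

With `batch_freq=100`, images are logged at powers of two first and then at every multiple of 100, while step 0 is skipped unless `log_first_step` is set.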

def divein(*args, **kwargs):
    if trainer.global_rank == 0:
        import pudb
        pudb.set_trace()

pdb is Python's built-in debugger; pudb is a full-screen, console-based visual debugger for Python.

try:
    ...
    # run
    if opt.train:
        try:
            trainer.fit(model, data)
        except Exception:
            melk()
            raise
    if not opt.no_test and not trainer.interrupted:
        trainer.test(model, data)
except Exception:
    if opt.debug and trainer.global_rank == 0:
        try:
            import pudb as debugger
        except ImportError:
            import pdb as debugger
        debugger.post_mortem()
    raise

If an error occurs during training, melk() saves a checkpoint and the exception is re-raised; with opt.debug set, rank 0 then opens a post-mortem debugger.
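The nested `try` / `except` structure can be reduced to a small, self-contained sketch. Here `fit` and `save_checkpoint` are hypothetical callables standing in for `trainer.fit(model, data)` and `melk()`, and only the standard-library `pdb` is used instead of the `pudb` fallback.

```python
import pdb


def run_training(fit, save_checkpoint, debug=False):
    # fit and save_checkpoint are hypothetical stand-ins for
    # trainer.fit(model, data) and melk().
    try:
        try:
            fit()
        except Exception:
            save_checkpoint()  # keep progress before propagating the error
            raise
    except Exception:
        if debug:
            pdb.post_mortem()  # inspect the exception currently being handled
        raise


saved = []


def failing_fit():
    raise RuntimeError("simulated failure")


try:
    run_training(failing_fit, lambda: saved.append("checkpoint"))
except RuntimeError as err:
    print("re-raised:", err, "| saved:", saved)
```

The inner handler saves state and re-raises, so the outer handler still sees the original exception and can hand its traceback to the debugger.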
